08 Oct The Rise of AI in Recruiting: What It Means for Your HR Team

Beyond the Buzz: Hiring in an Age of AI-Polished Resumes

Artificial Intelligence is reshaping recruitment in the United States at breakneck speed, but not always in the ways you’d expect. While companies race to adopt AI recruiting tools, a parallel revolution is happening on the candidate side: job seekers are using the same technology to game the system.

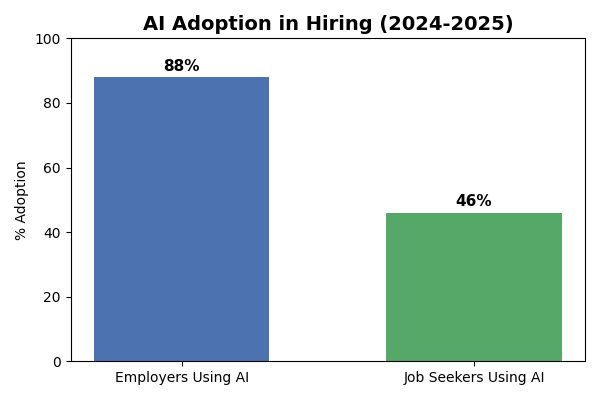

More than 80% of companies in the United States now use some form of Artificial Intelligence in hiring, and nearly half of all job seekers report using AI to enhance or even fully generate their resumes [1, 2]. For HR teams, staffing firms, and senior leadership tasked with filling high-impact roles, this creates a perfect storm: AI-enhanced resumes flooding applicant funnels, keyword-driven platforms that can be easily manipulated, and evaluation frameworks struggling to separate genuine professional experience from artificially polished applications.

This shift is particularly pronounced in competitive markets like New York City, where Digital Media, Customer Data Science, and Backend Engineer positions attract hundreds of AI-optimized applications. For talent acquisition leaders and staffing agencies navigating this landscape, the question isn’t whether to use AI; it’s how to use it without losing sight of what truly matters: finding candidates who can deliver results.

Key Takeaways

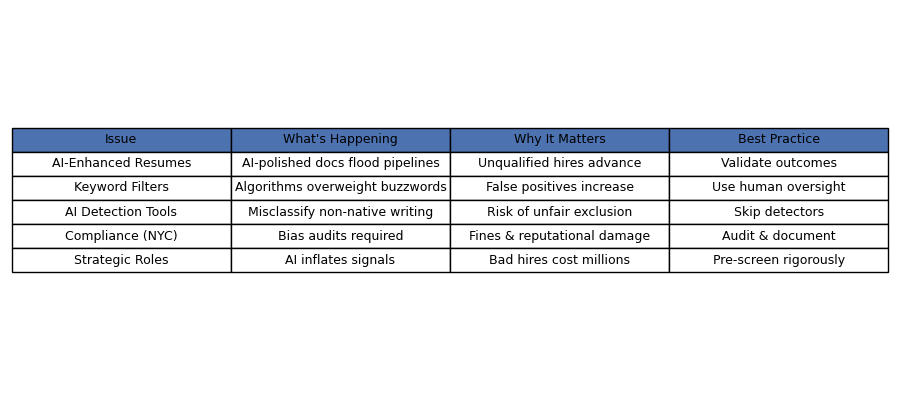

| Issue | What’s Happening | Why It Matters | Best Practice |
|---|---|---|---|
| AI-Enhanced Resumes | Job seekers increasingly use AI tools to polish or generate resumes [1]. | Keyword filters may surface unqualified candidates. | Validate outcomes and professional experience, not just phrasing. |
| Keyword/LLM Filters | Algorithms overweight buzzwords and can be gamed [7]. | False positives reduce efficiency in applicant funnels. | Use structured evaluation frameworks and human oversight. |
| AI Detection Tools | Detectors misclassify non-native writing and are unreliable [12]. | Risk of unfairly excluding candidates. | Skip detectors: focus on verified capabilities. |
| Compliance | NYC AEDT law requires bias audits and candidate notices [10]. | Non-compliance risks fines and reputational damage. | Audit tools, document processes, and center human review. |
| Strategic Roles | AI inflates signals but can’t prove outcomes. | Leadership hires demand proven high-impact results. | Pre-screen rigorously for track record and context. |

The New Reality: When Everyone’s Resume Looks Perfect

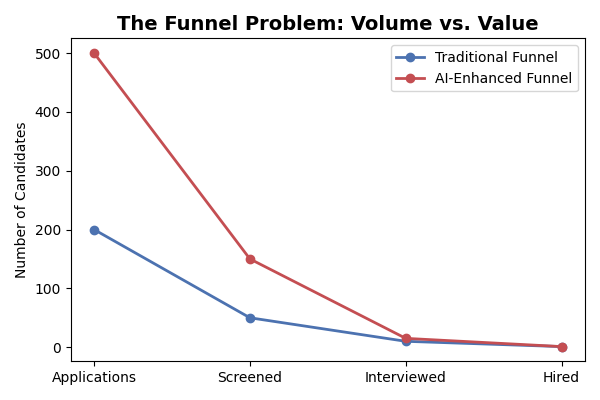

Recruitment has always been about balancing scale with precision. Artificial Intelligence tips that balance in unpredictable ways. AI recruiting tools enable massive funnel optimization (automating outreach, screening thousands of resumes, and scheduling interviews), but they also inflate the top of the funnel with applications that look more qualified than they actually are.

According to Canva’s 2025 study, candidates who used AI in their job applications were significantly more likely to secure interviews [1]. ResumeBuilder’s survey reveals just how widespread this practice has become: 46% of job seekers in the United States admitted they relied on AI tools like ChatGPT for resumes or cover letters [3].

For HR teams and staffing agencies, this translates to more volume, but not necessarily more value. A Backend Engineer candidate might have a perfectly optimized resume filled with buzzwords like “scalable microservices architecture” and “distributed systems expertise,” but that polished document reveals nothing about whether they’ve actually built and maintained systems under pressure. Professional experience, cultural alignment, and execution ability simply can’t be verified by AI-polished documents alone.

The Democratization Problem

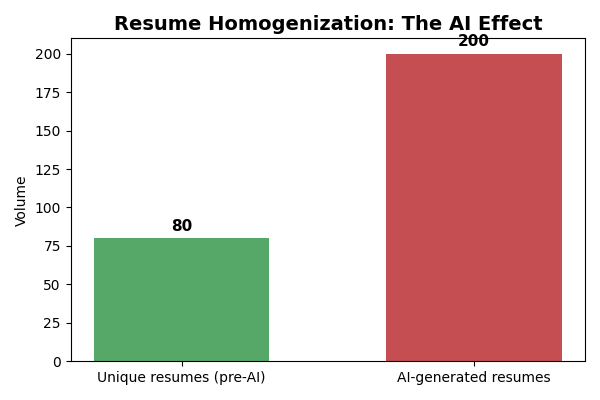

Artificial Intelligence has lowered the barrier to entry in ways both empowering and problematic. A job seeker can now generate a keyword-optimized resume tailored to a Customer Data Science or Digital Media role in minutes. Tools even suggest “high-impact role” phrasing specifically designed to catch recruiter attention.

This democratizes access to opportunities, a genuine benefit for candidates who struggle with self-presentation. But it also creates homogenization. When AI tools optimize every resume using the same algorithms and keyword databases, resumes begin to look and sound eerily alike. For recruiters relying on automated evaluation frameworks, the noise-to-signal ratio worsens dramatically.

Staffing firms, particularly those operating in competitive markets like New York, need sophisticated strategies to differentiate surface polish from true competency. This is where human expertise becomes essential.

AI Adoption in the United States: National Trends Meet NYC Regulations

Nationally, AI adoption in hiring is accelerating at a remarkable pace. SHRM’s 2024 Talent Trends survey of 2,366 HR professionals reported that 88% of organizations use Artificial Intelligence to support recruitment [4]. The technology promises efficiency gains that are hard to ignore: faster resume screening, reduced time-to-hire, and the ability to process thousands of applications that would overwhelm human recruiters.

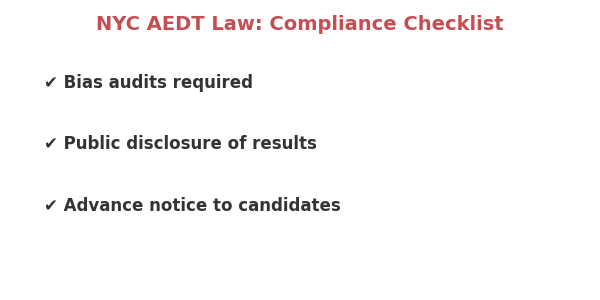

However, New York City represents a different landscape entirely. Local Law 144, governing Automated Employment Decision Tools (AEDT), adds layers of compliance that make NYC unique in the United States [10]. While companies nationwide optimize purely for efficiency, NYC recruiters must simultaneously optimize for compliance, fairness, and transparency.

NYC Compliance Reality: Organizations using AI recruiting tools in New York City must conduct bias audits, publicly post audit results, and provide advance notice to candidates when automated systems influence hiring decisions. This isn’t optional; it’s the law.

For staffing agencies operating in NYC’s Digital Media, analytics, and technology sectors, this regulatory environment demands a more thoughtful approach. The evaluation frameworks that work elsewhere may not satisfy NYC’s requirements, making local expertise and compliance knowledge invaluable.

Where AI Tools Excel, and Where They Create Blind Spots

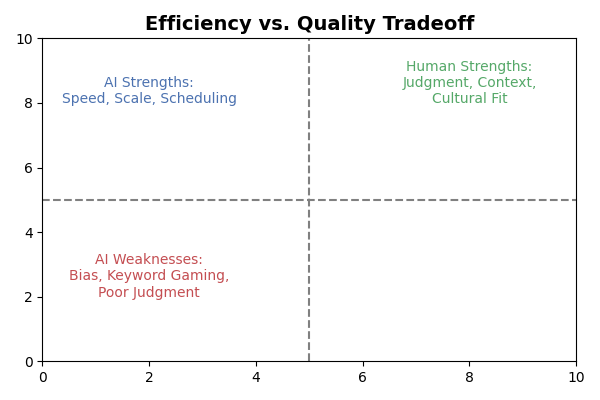

Artificial Intelligence genuinely shines in logistics and funnel optimization. Parsing thousands of resumes, scheduling interviews across time zones, and triaging large candidate pools are all tasks where automation delivers clear value. For high-volume roles, AI recruiting tools can reduce time-to-hire from weeks to days.

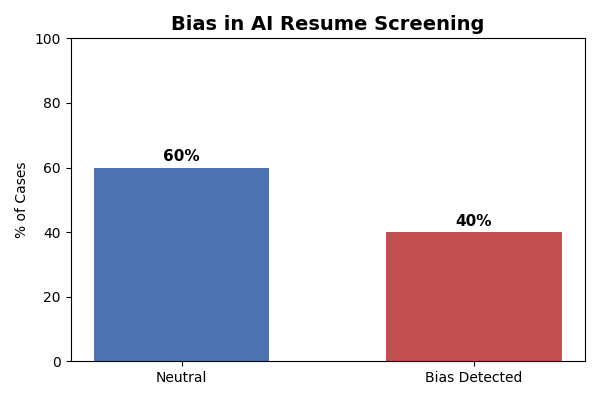

But for positions demanding judgment, creativity, or senior leadership capabilities, over-reliance on AI creates significant risks. University of Washington researchers found that large language models introduced measurable gender and racial biases when ranking resumes [6]. The Brookings Institution highlights how these systemic inequities compound when AI tools replace human judgment in resume screening [9].

The High-Stakes Problem for Senior Leadership

When you’re hiring for a high-impact role (a senior leadership position, a specialized Backend Engineer role, or a strategic Customer Data Science lead), the stakes rise sharply. A poor hiring decision at the executive level can cost millions, erode organizational culture, and damage investor confidence.

AI-enhanced resumes inflate candidate signals in ways that are particularly dangerous for these positions. The technology can make someone look like a transformational leader on paper, but it can’t demonstrate actual leadership under pressure, strategic decision-making in ambiguous situations, or cultural alignment with organizational values. That’s why evaluation frameworks for high-impact roles must emphasize proven professional experience and outcome validation, not resume aesthetics.

This is precisely where specialized staffing agencies add value that pure technology cannot replicate. The ability to verify track records, understand industry context, and assess candidates beyond their self-presentation requires human expertise and deep industry connections.

The Keyword Trap: How Evaluation Frameworks Are Being Gamed

Keyword-based matching (the backbone of many AI recruiting tools) is surprisingly easy to manipulate. Academic research has documented sophisticated “fake resume” attacks where adversarial data poisons automated hiring platforms [14]. But you don’t need to be a data scientist to game these systems. Job seekers quickly learn which keywords trigger positive responses and adjust accordingly.

The consequences cascade through your hiring funnel:

False positives: unqualified candidates advance because they’ve mastered keyword optimization. A candidate might list “multimodal models” and “machine learning pipelines” without understanding the underlying concepts, yet sail through initial screening.

False negatives: strong but unconventional candidates get filtered out because they don’t use the exact terminology your algorithms expect. Someone with proven professional experience might describe their accomplishments using different language than what’s in your keyword database.
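Both failure modes can be reproduced with a few lines of code. The sketch below is a deliberately naive keyword screen, not how any particular vendor's tool works; the keyword list, the sample resume snippets, and the 0.5 threshold are all invented for illustration.

```python
import re

# Hypothetical keyword list for a backend role (an assumption for illustration).
REQUIRED_KEYWORDS = {"microservices", "distributed systems", "kubernetes"}


def keyword_score(resume_text: str, keywords: set[str] = REQUIRED_KEYWORDS) -> float:
    """Return the fraction of keywords that appear verbatim in the resume text."""
    text = resume_text.lower()
    hits = sum(
        1 for kw in keywords
        if re.search(r"\b" + re.escape(kw) + r"\b", text)
    )
    return hits / len(keywords)


# A buzzword-heavy snippet with no evidence of delivered work.
polished = "Expert in scalable microservices, distributed systems, and Kubernetes."

# Real experience described in the candidate's own words.
unconventional = (
    "Ran the order-processing backend for six years: split a monolith into "
    "independently deployed services and kept it running across three data centers."
)

THRESHOLD = 0.5  # hypothetical cut-off for advancing to the next stage

for label, text in [("polished", polished), ("unconventional", unconventional)]:
    score = keyword_score(text)
    decision = "advance" if score >= THRESHOLD else "reject"
    print(f"{label}: score={score:.2f} -> {decision}")
```

The buzzword-only snippet matches every keyword and advances, while the candidate who describes six years of hands-on work matches none and is rejected. A screen like this measures vocabulary, not capability.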

Staffing agencies and HR teams need to evolve their evaluation frameworks beyond simple keyword matching. This means implementing structured scorecards, behavioral interview questions, and calibrated rubrics that resist keyword inflation. It means validating not just what candidates claim, but what they’ve actually accomplished.
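One way to picture a structured scorecard is as a fixed set of weighted criteria that a human reviewer rates after verification, rather than a count of matching phrases. The sketch below is only an illustration: the criteria names, the weights, and the 1 to 5 rating scale are assumptions, and any real rubric would need to be calibrated to the role.

```python
from dataclasses import dataclass

# Hypothetical criteria and weights (assumptions; calibrate per role).
WEIGHTS = {
    "verified_outcomes": 0.4,
    "technical_assessment": 0.3,
    "behavioral_interview": 0.2,
    "references": 0.1,
}


@dataclass
class Scorecard:
    """Human-assigned ratings on a 1-5 scale, one per criterion."""
    ratings: dict[str, int]

    def total(self) -> float:
        # Refuse to score until every criterion has been rated by a reviewer.
        missing = set(WEIGHTS) - set(self.ratings)
        if missing:
            raise ValueError(f"unrated criteria: {sorted(missing)}")
        for name in WEIGHTS:
            if not 1 <= self.ratings[name] <= 5:
                raise ValueError(f"rating for {name} must be between 1 and 5")
        # Weighted average; resume wording contributes nothing directly.
        return sum(WEIGHTS[name] * self.ratings[name] for name in WEIGHTS)


card = Scorecard(ratings={
    "verified_outcomes": 4,
    "technical_assessment": 5,
    "behavioral_interview": 3,
    "references": 4,
})
print(round(card.total(), 2))
```

Because every input is a rating a reviewer assigns after checking evidence, adding more keywords to a resume does not move the score.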

Why Candidate Experience Still Demands the Human Touch

While AI recruiting tools can speed up processes, candidate experience ultimately hinges on human connection. SHRM reports that HR leaders increasingly recognize that Artificial Intelligence amplifies rather than replaces the need for human judgment [5].

For candidates navigating competitive NYC industries like Digital Media and Analytics, being assessed fairly and contextually is critical. Job seekers can tell when they’re being evaluated by an algorithm versus engaged with by an actual human who understands their field. This distinction affects not just whether they accept an offer, but whether they recommend your organization to others in their network.

Verifying professional experience through structured references, outcome-based screening, and contextual conversations improves quality-of-hire and enhances the entire candidate journey. In tight talent markets, this competitive advantage matters enormously.

NYC’s AEDT Law: Legal Guardrails You Cannot Ignore

New York City’s Local Law 144 represents one of the first comprehensive regulations governing AI in hiring in the United States. The requirements are specific and enforceable [10, 11]:

Bias audits must have been conducted on an automated employment decision tool no more than one year before it is used, with methodology and results that meet statutory standards.

Public disclosure of audit results must be posted where candidates can access them, demonstrating transparency in how AI recruiting tools make decisions.

Advance notice must be given to candidates when AEDTs influence hiring or promotion decisions, giving applicants visibility into the process.

For staffing agencies operating in New York, compliance is a strategic imperative. Failing to adhere risks substantial fines, potential lawsuits, and reputational damage in a highly scrutinized market. The investment in proper evaluation frameworks and documentation processes pays for itself many times over by avoiding these risks.
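To make the bias audit requirement more concrete, the sketch below computes selection rates and impact ratios per group, where each group's selection rate is divided by the rate of the most-selected group. That this is the kind of metric such an audit reports is our understanding of the DCWP rules rather than something stated in this article, and the group names and counts are made up. It is a sketch only and no substitute for an independent audit or legal advice.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / total applicants, skipping empty groups."""
    return {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0
    }


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    top = max(rates.values())
    if top == 0:
        raise ValueError("no group had any selections")
    return {group: rate / top for group, rate in rates.items()}


# Made-up counts for illustration: (candidates advanced, candidates screened).
sample = {"group_a": (40, 100), "group_b": (24, 80)}

for group, ratio in impact_ratios(sample).items():
    print(f"{group}: impact ratio {ratio:.2f}")
```

A ratio well below 1.0 for a group is a prompt to examine how the tool treats that group. What counts as acceptable is a question for the auditor and counsel, not for this snippet.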

Balancing Efficiency with Quality for High-Impact Roles

Here’s the central tension: Artificial Intelligence reduces time-to-hire for high-volume positions, but senior leadership and technical specialist roles require fundamentally different approaches. You can’t hire a Backend Engineer the same way you’d hire entry-level customer service representatives. The cost of a wrong hire scales dramatically with role seniority and technical complexity.

This balance becomes especially crucial in industries where New York City excels: finance, Customer Data Science, Digital Media and Analytics, and specialized technology roles. A Backend Engineer who looked perfect on paper but can’t actually architect scalable systems will cost you months of lost productivity and team morale.

Smart organizations recognize that funnel optimization isn’t about processing more candidates faster; it’s about ensuring the candidates who advance are genuinely aligned with role requirements and organizational needs. This distinction separates effective hiring from merely efficient hiring.

How Staffing Agencies Filter AI Noise from Real Talent

This is where specialized staffing agencies create measurable value. By acting as a strategic buffer between your organization and the flood of AI-enhanced applications, experienced staffing firms filter out artificial polish and confirm genuine professional experience before candidates ever reach your interview stage.

Consider what this means in practice: instead of your HR team wading through 500 AI-optimized resumes for a Backend Engineer position, a staffing agency with deep industry connections pre-qualifies candidates based on verified outcomes, technical assessments, and reference checks. You interview five genuinely qualified candidates instead of fifty who simply mastered keyword optimization.

This is true funnel optimization: not just moving candidates through stages faster, but ensuring each stage adds real signal about candidate quality. For organizations competing for talent in Digital Media, analytics, and technology, this efficiency translates directly to competitive advantage.

The Summit Staffing Partners Approach

At Summit Staffing Partners, we’ve built our process around a fundamental insight: Artificial Intelligence is a powerful tool, but it’s not a replacement for human expertise and industry relationships. Our evaluation frameworks combine the efficiency of modern technology with the discernment that only comes from years of specialized recruiting experience.

We don’t just match keywords; we validate professional experience. We don’t just screen resumes; we verify outcomes. And we don’t just fill positions; we build relationships that help our clients make high-impact hires with confidence.

Our team understands New York City’s unique compliance landscape, maintains deep connections across Digital Media, analytics, and technology sectors, and brings structured evaluation frameworks that resist the AI-enhanced noise flooding applicant funnels. When you’re hiring for roles where getting it right matters (senior leadership positions, specialized technical roles, strategic hires that will shape your organization), that combination of technology and human expertise makes all the difference.

The Next Wave: Multimodal Models and Emerging AI Recruiting Tools

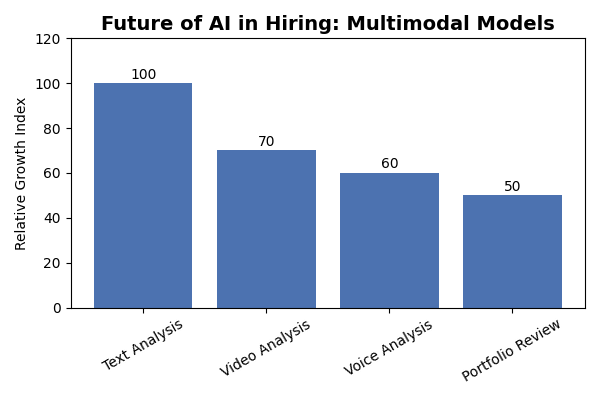

The future of AI in recruitment isn’t limited to text analysis. Multimodal models can now analyze video interviews, evaluate voice patterns, and assess digital portfolios across multiple formats simultaneously. These capabilities promise even greater efficiency and insights.

But they also raise new questions about bias, transparency, and legal compliance. A tool that analyzes facial expressions or speech patterns during video interviews could inadvertently discriminate based on cultural communication styles, disabilities, or neurodivergence. Senior leadership teams need to approach these emerging technologies with both enthusiasm and caution.

What looks like a cutting-edge solution can quickly become a liability without rigorous evaluation frameworks and human oversight. The lesson from AI-enhanced resumes applies equally to multimodal models: technology amplifies both opportunities and risks, and the organizations that succeed will be those that maintain human judgment at the center of their hiring processes.

Building Trust in an AI-Driven Recruiting Landscape

Artificial Intelligence isn’t disappearing from recruitment, nor should it. The technology delivers genuine value when used thoughtfully. But its blind spots are equally real, and they’re consequential.

For HR leaders and staffing agencies in New York City and across the United States, success requires a balanced approach: embracing automation where it adds value while maintaining human oversight where it matters most. This means updating evaluation frameworks to resist keyword gaming, implementing structured assessments that verify professional experience, and centering candidate experience even as processes become more efficient.

Organizations that get this balance right will reduce time-to-hire, improve hiring outcomes, and build diverse, high-performing teams, even in markets crowded with AI-polished resumes. Those that don’t risk drowning in volume while missing the talent they actually need.

The question isn’t whether to use AI recruiting tools. It’s whether you have the expertise, evaluation frameworks, and human judgment necessary to use them well. And for many organizations, the answer is partnering with staffing agencies that have already solved these challenges, turning AI’s efficiency into genuine competitive advantage while avoiding its pitfalls.

Ready to cut through the noise? If you’re tired of AI-enhanced resumes that look impressive but deliver disappointment, Summit Staffing Partners offers a done-for-you solution. We handle the complexity of modern recruiting, from NYC compliance to candidate pre-qualification, so you can focus on building your team with confidence. Let’s talk about your hiring needs.

References

- Business Wire. “Designing Your Dream Job: Canva Study Reveals AI and Visuals Are Transforming the Job Hunt.” 2025.

- Canva Newsroom. “New Year, New Job: Our Playbook for Standing Out in 2025.”

- ResumeBuilder. “3 in 4 Job Seekers Who Used ChatGPT to Write Their Resume Got an Interview.”

- SHRM. “The Role of AI in HR Continues to Expand.”

- SHRM (PDF). “From Adoption to Empowerment: Shaping the AI-Driven Workforce of Tomorrow.”

- University of Washington News. “AI tools show biases in ranking job applicants’ names according to race and gender.” 2024.

- AAAI AIES Proceedings. “Gender, Race, and Intersectional Bias in Resume Screening via Language Model Retrieval.” 2024.

- arXiv. “Gender, Race, and Intersectional Bias in Resume Screening via Language Model Retrieval.”

- Brookings. “Gender, race, and intersectional bias in AI resume screening via language model retrieval.” 2025.

- NYC Department of Consumer and Worker Protection. “Automated Employment Decision Tools (AEDT).”

- NYC DCWP (PDF). “Automated Employment Decision Tools: FAQs.”

- Stanford HAI. “AI-Detectors Biased Against Non-Native English Writers.”

- ScienceDirect. “GPT detectors are biased against non-native English writers.”

- arXiv. “Fake Resume Attacks: Data Poisoning on Online Job Platforms.” 2024.
